Challenges and visions

When companies first adopt generative and agentic AI, they usually start with low-risk use cases. However, in highly regulated industries like healthcare, even seemingly simple administrative tasks carry inherent risks.

This is because many core processes, such as records maintenance and payment adjustments, require human oversight and access to personally identifiable information (PII) and protected health information (PHI). This makes AI-enabled automation much more complicated in healthcare than in other industries.

Despite these challenges, healthcare organizations can leverage advances in AI, including generative and agentic AI, to reach the next level of automation by implementing a framework that prioritizes responsible use, compliance and continuous innovation.

Integrating AI in healthcare

The healthcare industry is no stranger to automation. To reduce costs, serve more patients and deliver better outcomes, health organizations have long relied on software solutions to automate repetitive tasks, such as alerting processing teams to billing errors.

However, it has been much harder to automate more complex or nuanced processes, such as verifying and resolving that error. These tasks typically require a level of cognitive processing and cross-departmental coordination that traditional automation frameworks cannot support. They may also lack clear, consistent rules or involve time-sensitive decisions that call for human intervention.

New AI capabilities are changing this, notably agentic AI: autonomous systems capable of proactive, adaptive action. Agentic AI systems mimic human cognitive processes and enable the automation of complex, high-value healthcare tasks that require flexibility, contextual judgment or collaboration across teams.

Unlock the next era of automation in healthcare

One way to integrate AI and automation is to pilot an AI-enabled desk-level procedure assistant that can automate complex, repetitive tasks that traditional software cannot handle.

In phase 1, assistants act as augmentation tools. They support human agents by automating actions such as retrieving procedural information and checking provider notes within the system. They can also serve as guides, giving human employees recommendations or step-by-step instructions for actions such as updating provider records or approving changes.
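To make the phase 1 pattern concrete, here is a minimal Python sketch of an assistant that looks up a desk-level procedure and surfaces relevant provider notes, while leaving every action to the human agent. The procedure library, record structure and names (`PROCEDURES`, `ProviderRecord`, `phase1_assist`) are hypothetical placeholders, not part of any specific product.

```python
from dataclasses import dataclass, field

# Hypothetical desk-level procedure library: task name -> ordered steps.
# Real content would come from the organization's own procedure documentation.
PROCEDURES: dict[str, list[str]] = {
    "update_provider_address": [
        "Confirm the provider's identity on the change request",
        "Check existing provider notes for pending changes",
        "Update the address fields in the provider record",
        "Submit the change for supervisor approval",
    ],
}


@dataclass
class ProviderRecord:
    provider_id: str
    notes: list[str] = field(default_factory=list)


@dataclass
class Guidance:
    task: str
    steps: list[str]
    relevant_notes: list[str]


def phase1_assist(task: str, record: ProviderRecord, keyword: str) -> Guidance:
    """Phase 1: look up the procedure and surface relevant notes.

    The assistant only recommends; the human agent performs every step.
    """
    steps = PROCEDURES.get(task)
    if steps is None:
        raise KeyError(f"No desk-level procedure found for task {task!r}")
    # Case-insensitive match so the human agent does not have to search notes manually.
    notes = [n for n in record.notes if keyword.lower() in n.lower()]
    return Guidance(task=task, steps=list(steps), relevant_notes=notes)


if __name__ == "__main__":
    rec = ProviderRecord("P-001", ["Address change requested by office", "Credentialing complete"])
    guidance = phase1_assist("update_provider_address", rec, keyword="address")
    for i, step in enumerate(guidance.steps, start=1):
        print(f"{i}. {step}")
    print("Relevant notes:", guidance.relevant_notes)
```

The function returns guidance rather than performing updates, which is the defining trait of the augmentation phase: the human stays the actor.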

Phase 2 functionality allows human agents and AI agents to reverse roles. AI agents take the initiative and actively perform tasks such as receiving provider record change requests, routing them to the appropriate department, validating them and updating records. Human agents maintain oversight of the entire process.
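The phase 2 role reversal can be sketched in the same hedged way: the agent routes, validates and applies a provider record change, but a human approval callback gates every write and each decision is logged for oversight. The routing table, department names and validation rule below are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical routing table: change type -> owning department.
ROUTING: dict[str, str] = {
    "address": "Provider Data Management",
    "specialty": "Credentialing",
    "billing": "Provider Billing",
}


@dataclass
class ChangeRequest:
    provider_id: str
    change_type: str
    new_value: str


@dataclass
class Outcome:
    department: Optional[str]
    status: str
    audit: list[str] = field(default_factory=list)


def phase2_process(
    req: ChangeRequest,
    records: dict[str, dict[str, str]],
    human_approves: Callable[[ChangeRequest, str], bool],
) -> Outcome:
    """Phase 2: the agent routes, validates and updates; a human retains oversight."""
    audit: list[str] = []

    # 1. Route the request to the owning department.
    department = ROUTING.get(req.change_type)
    if department is None:
        audit.append("no route found; escalated to human queue")
        return Outcome(None, "escalated", audit)
    audit.append(f"routed to {department}")

    # 2. Validate: the provider must exist and the new value must be non-empty.
    if req.provider_id not in records or not req.new_value.strip():
        audit.append("validation failed")
        return Outcome(department, "rejected", audit)
    audit.append("validated")

    # 3. Human oversight gate: nothing is written without sign-off.
    if not human_approves(req, department):
        audit.append("human reviewer declined")
        return Outcome(department, "declined", audit)

    # 4. Apply the update and record it in the audit trail.
    records[req.provider_id][req.change_type] = req.new_value
    audit.append("record updated")
    return Outcome(department, "updated", audit)
```

Passing the approval step in as a function keeps the human-in-the-loop checkpoint explicit; an organization could later relax it for low-risk change types without restructuring the pipeline.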

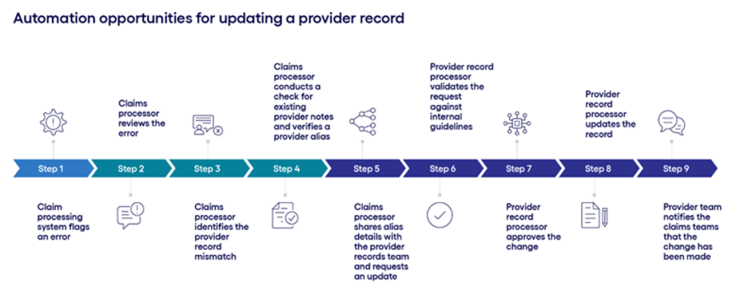

Figure 1 outlines how an organization can use an AI assistant to update provider records. Depending on whether it deploys a version of this agent with phase 1 or phase 2 functionality, an organization can selectively automate parts of the workflow. As a result, what was once a nine-step, human-centric workflow can now be streamlined to five or six steps, with minimal involvement from human agents.

In another use case, instead of a human care manager manually reviewing case notes, prescription history and self-reported progress, an AI agent retrieves this data from multiple sources and uses generative AI to summarize it in a clear, consumable format.
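A minimal sketch of the retrieval half of this pattern, assuming each data source can be wrapped in a simple fetch function. The source names, prompt wording and the `llm` callable are hypothetical; `llm` stands in for whatever generative model client an organization actually uses.

```python
from typing import Callable

# A source adapter takes a patient ID and returns a list of text items.
# In practice each adapter would call a separate system; these are hypothetical.
Source = Callable[[str], list[str]]


def gather_patient_context(patient_id: str, sources: dict[str, Source]) -> str:
    """Pull data from every source and label it by origin so the summary stays traceable."""
    sections = []
    for name, fetch in sources.items():
        items = fetch(patient_id)
        body = "\n".join(f"- {item}" for item in items) if items else "- (no data)"
        sections.append(f"## {name}\n{body}")
    return "\n\n".join(sections)


def summarize_for_care_manager(
    patient_id: str,
    sources: dict[str, Source],
    llm: Callable[[str], str],
) -> str:
    """Build a prompt from the gathered context and pass it to a generative model.

    `llm` is a placeholder for the organization's model client.
    """
    context = gather_patient_context(patient_id, sources)
    prompt = (
        "Summarize the following patient information for a care manager. "
        "Note which section each point comes from.\n\n" + context
    )
    return llm(prompt)


if __name__ == "__main__":
    demo_sources: dict[str, Source] = {
        "Case notes": lambda pid: ["Discussed diet plan at last visit"],
        "Prescription history": lambda pid: [],
        "Self-reported progress": lambda pid: ["Walking 20 minutes per day"],
    }
    # Echo stub in place of a real model, so the assembled prompt is visible.
    print(summarize_for_care_manager("patient-123", demo_sources, llm=lambda p: p))
```

Labeling each section by source lets a care manager trace a point in the summary back to where it came from, which also makes follow-up questions easier to ground.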

This not only reduces manual data collection and analysis time but also lets care managers interact with the information. For example, care managers can ask follow-up questions directly within the platform, request more detail, or use prompts to view specific parts of a patient's profile.

Building a strong foundation for AI evolution in healthcare

As AI continues to evolve, healthcare organizations will need frameworks that can take advantage of technological advances while complying with critical regulations. There are three important steps to building an AI foundation that prioritizes responsibility, compliance and innovation.

1. Embrace responsible AI practices from the start.

Before considering any specific industry regulations, healthcare organizations must first embrace responsible AI practices that build trust in the technology and its applications. This requires continually asking whether a given use of generative or agentic AI raises privacy or security issues that call for legal review and process changes.

For example, another agentic use case our team is working on involves voice-to-text transcription of intake assessments and the use of generative AI agents to summarize those calls. While this application offers significant time savings, it also raises important legal and compliance questions that your organization may not anticipate at first. For instance, patients may have consented to call recording, but sending those recordings to AI tools could introduce third-party intermediaries and require additional consent.
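One way to operationalize this kind of review is to record consent as discrete scopes and check them before a recording is sent to any AI tool. The sketch below assumes that model; the scope names and the rule that third-party tools need an extra scope are illustrative assumptions, and the actual requirements would come out of the organization's own legal review.

```python
from dataclasses import dataclass
from enum import Enum


class ConsentScope(Enum):
    # Hypothetical consent scopes; real categories depend on legal review.
    CALL_RECORDING = "call_recording"
    THIRD_PARTY_AI_PROCESSING = "third_party_ai_processing"


@dataclass(frozen=True)
class Recording:
    call_id: str
    consents: frozenset[ConsentScope]


@dataclass(frozen=True)
class AITool:
    name: str
    is_third_party: bool


def may_send_to_ai(rec: Recording, tool: AITool) -> bool:
    """Allow processing only if every required consent scope is present."""
    required = {ConsentScope.CALL_RECORDING}
    if tool.is_third_party:
        # An external service adds an intermediary the patient may not have
        # agreed to, so this sketch requires a separate, explicit scope.
        required.add(ConsentScope.THIRD_PARTY_AI_PROCESSING)
    # Subset check: all required scopes must appear in the recorded consents.
    return required <= rec.consents
```

Keeping the check in one function makes it easy to log each decision for audit and to update the rule set as legal guidance changes.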

This scenario highlights the need to assess AI integration not only from a functional perspective but also through the lens of consent management, legal risk, auditing and intellectual property.

2. Apply data retrieval and role-based access standards to AI agents and tools.

Integrating agentic AI into your healthcare workflows is not just about building the technical capabilities of your AI assistants; it is also about safely and securely managing model access to PII and PHI.

For example, if an AI agent needs to access a patient's medical record to create a summary for a care manager, it must adhere to the same hierarchical role-based access control (RBAC) as the person initiating the request. This does not necessarily require building agent-specific RBAC from scratch, but organizations must ensure that existing controls carry over into AI tools, including those from software or solution providers and their API ecosystems. This ensures that all data access is validated and appropriate.
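A minimal sketch of the principle that an agent inherits, rather than extends, the requester's access: the agent session is bound to the user who invoked it, and every read goes through that user's effective permissions in a simple role hierarchy. The roles, permission strings and patient-assignment check are hypothetical; a production system would delegate these checks to its existing identity and access management service.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical role hierarchy: each role inherits the permissions of its parent.
ROLE_PARENT: dict[str, Optional[str]] = {
    "care_manager": "staff",
    "staff": None,
}

ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "staff": frozenset({"read:schedule"}),
    "care_manager": frozenset({"read:medical_record", "write:care_summary"}),
}


def effective_permissions(role: str) -> set[str]:
    """Walk up the hierarchy and collect inherited permissions."""
    perms: set[str] = set()
    current: Optional[str] = role
    while current is not None:
        perms |= ROLE_PERMISSIONS.get(current, frozenset())
        current = ROLE_PARENT.get(current)
    return perms


@dataclass(frozen=True)
class User:
    user_id: str
    role: str
    assigned_patients: frozenset[str]


class AgentSession:
    """An AI agent acting on behalf of a user; it never has more access than that user."""

    def __init__(self, on_behalf_of: User) -> None:
        self.user = on_behalf_of

    def check(self, permission: str, patient_id: str) -> None:
        # Same checks the human would face: role permission plus patient assignment.
        if permission not in effective_permissions(self.user.role):
            raise PermissionError(f"{self.user.user_id} lacks {permission}")
        if patient_id not in self.user.assigned_patients:
            raise PermissionError(f"{self.user.user_id} is not assigned to {patient_id}")

    def read_medical_record(self, patient_id: str, store: dict[str, str]) -> str:
        self.check("read:medical_record", patient_id)
        return store[patient_id]
```

Because the session holds no permissions of its own, revoking or changing a user's role automatically changes what the agent can do on that user's behalf.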

Conclusion: Enabling the next level of productivity and efficiency

The use of AI within healthcare is on a clear trajectory: from augmentation tools for human workers to semi-autonomous agents that operate under human oversight. As AI tools become more accurate, AI agents may eventually learn and act fully independently, which could call into question the future role of traditional software.

This rapid rate of change underscores the need for healthcare institutions to approach their AI journeys with both urgency and precision. Early adopters will be the first to build essential security and legal frameworks and may also influence policy and product decisions, positioning their organizations to lead in an AI-driven future.